Behavioral Agents

The concept of an “agent” has been foundational in AI for decades. At its core, an agent is an autonomous entity — typically software — that interacts with an environment. This interaction follows a recursive feedback loop:

- The agent takes an action in the environment.

- The environment changes in response to the action.

- The environment provides new state information to the agent.

- The agent uses this updated information to decide its next action.

- The cycle repeats continuously.
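The loop above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation. `NumberLineEnv`, `greedy_agent`, and `run_episode` are hypothetical names. The environment is a toy number line: the state is an integer position and the action is a step of -1 or +1.

```python
class NumberLineEnv:
    """Hypothetical toy environment: the state is an integer position,
    and the episode ends when the agent reaches the goal."""

    def __init__(self, goal: int = 5) -> None:
        self.goal = goal
        self.position = 0

    def reset(self) -> int:
        self.position = 0
        return self.position

    def step(self, action: int) -> tuple[int, bool]:
        # The environment changes in response to the action (-1 or +1)...
        self.position += action
        # ...and provides new state information to the agent.
        return self.position, self.position == self.goal


def greedy_agent(state: int, goal: int) -> int:
    # The agent uses the updated state to decide its next action.
    return 1 if state < goal else -1


def run_episode(env: NumberLineEnv, max_steps: int = 100) -> list[int]:
    state = env.reset()
    trajectory = [state]
    for _ in range(max_steps):  # the cycle repeats until the episode ends
        action = greedy_agent(state, env.goal)
        state, done = env.step(action)
        trajectory.append(state)
        if done:
            break
    return trajectory


print(run_episode(NumberLineEnv(goal=3)))  # [0, 1, 2, 3]
```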

Figure 1: The typical feedback loop between an agent and the environment. The agent sends an ACTION, and the environment responds with the new STATE.

In Figure 1, we use the simple game of tic-tac-toe to demonstrate the flow. Here, the state is the current placement of “X”s and “O”s on the board, and the action is the square where the agent wants to place its next “X”. But once we start to think about other environments, a natural question arises:

Can state and action be anything?

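Technically, yes. A state can be any information the environment exposes, and an action can be any output the environment accepts. Exploring the implications of this flexibility, however, leads to deeper insights.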
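Returning to the tic-tac-toe example from Figure 1, here is one possible encoding of its state and action. It is an assumption, not the implementation behind the figure. The state is a 9-tuple whose entries are `"X"`, `"O"`, or `" "`, and an action is the index of an empty square. Both players here simply pick a random legal square. All function names are hypothetical.

```python
import random
from typing import Optional

# The eight winning lines on a 3x3 board, as square indices 0-8.
WIN_LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),
    (0, 3, 6), (1, 4, 7), (2, 5, 8),
    (0, 4, 8), (2, 4, 6),
]

Board = tuple[str, ...]  # state: "X", "O", or " " for each of the 9 squares


def legal_actions(board: Board) -> list[int]:
    # An action is the index of an empty square.
    return [i for i, cell in enumerate(board) if cell == " "]


def winner(board: Board) -> Optional[str]:
    for a, b, c in WIN_LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def apply_action(board: Board, action: int, mark: str) -> Board:
    # The environment returns the new state with the mark placed.
    if board[action] != " ":
        raise ValueError(f"square {action} is already taken")
    return board[:action] + (mark,) + board[action + 1:]


def play_random_game(seed: int = 0) -> tuple[Board, Optional[str]]:
    rng = random.Random(seed)
    board: Board = (" ",) * 9
    mark = "X"
    while winner(board) is None and legal_actions(board):
        action = rng.choice(legal_actions(board))  # both players pick at random
        board = apply_action(board, action, mark)
        mark = "O" if mark == "X" else "X"
    return board, winner(board)


board, result = play_random_game(seed=42)
for row in range(3):
    print("|".join(board[row * 3:row * 3 + 3]))
print("winner:", result)
```

A learning agent would replace `rng.choice` with a policy that maps the board to a square. The state and action interfaces stay the same.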

Are LLMs Agents?

In recent years, the term “agent” has been used widely, sometimes in ways that blur its original meaning. Many now assume that a large language model (LLM) is inherently an agent. An LLM can serve as a component of an agent, for example one that scrapes a Twitter feed (state) and generates a tweet (action). On its own, however, the LLM does not function as an autonomous agent, because nothing in the model itself runs the feedback loop with an environment.

Environments where natural language is the primary mode of interaction, such as Twitter, are a natural fit for LLM-based agents. But can these same agents be used in other environments, like games?

LLM Agents in Games

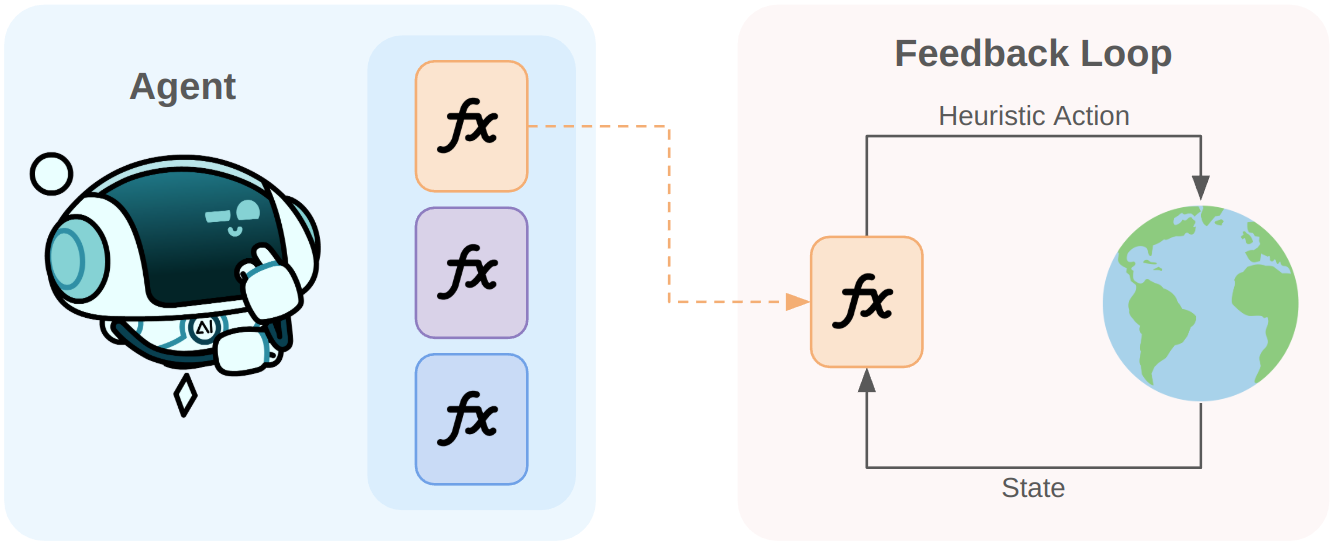

To play a game well, an agent must implement a strategy, which involves solving a set of subtasks within that game. Most LLM-based agents today do not learn strategies (with some notable exceptions). Instead, they select from a predefined set of functions, which typically consist of pre-programmed rules (heuristics).
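The function-selection pattern described above might look something like the following sketch. The corridor setting, the two skills, and `stub_llm_select` are all hypothetical. In a real system the selector would be an LLM call. Here it is replaced by a keyword check so that the example runs offline. Once a skill is chosen, every action it returns is committed until its hardcoded termination condition fires.

```python
from typing import Callable

WIDTH = 5  # hypothetical corridor with cells 0..4


def walk_right_until_wall(pos: int) -> list[int]:
    # Pre-programmed rule: keep stepping right until the wall is reached.
    actions = []
    while pos < WIDTH - 1:  # heuristic termination condition
        pos += 1
        actions.append(+1)
    return actions


def walk_left_until_wall(pos: int) -> list[int]:
    # Mirror-image rule: keep stepping left until the wall is reached.
    actions = []
    while pos > 0:
        pos -= 1
        actions.append(-1)
    return actions


# The predefined set of functions the selector may choose from.
SKILLS: dict[str, Callable[[int], list[int]]] = {
    "walk_right_until_wall": walk_right_until_wall,
    "walk_left_until_wall": walk_left_until_wall,
}


def stub_llm_select(observation: str) -> str:
    # Hypothetical stand-in for an LLM call that maps a text description
    # of the state to the name of one predefined function.
    return "walk_left_until_wall" if "left" in observation else "walk_right_until_wall"


def llm_agent_turn(pos: int, goal_side: str) -> tuple[str, list[int]]:
    skill = stub_llm_select(f"the goal is on the {goal_side}")
    # All actions from the chosen skill are decided up front; nothing can
    # change course mid-skill, even if the environment changes.
    return skill, SKILLS[skill](pos)


print(llm_agent_turn(2, "right"))  # ('walk_right_until_wall', [1, 1])
```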

In contrast, behavioral agents learn to select an action on every frame (the loop in Figure 1), adapting dynamically to their environment. This is generally not possible with LLM-based agents because of their inference time and network latency. Specialized behavioral agents are orders of magnitude smaller than LLMs, so they can run locally and perform inference in a fraction of the time. Thus, the main advantages of behavioral agents are:

- Flexibility: They are not constrained by developer-imposed heuristics and can learn any set of strategies.

- Dynamism: They can adjust their actions frame-by-frame instead of waiting for a heuristic to reach its termination condition.
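For contrast, here is a minimal behavioral agent that learns a policy and picks a fresh action on every frame. The article does not name a learning algorithm. This sketch swaps in tabular Q-learning, a standard reinforcement-learning method, on a hypothetical six-cell corridor. Agents for real games typically replace the table with a small neural network, but the per-frame decision structure is the same.

```python
import random

N_STATES = 6        # hypothetical corridor: cells 0..5, cell 5 is the goal
ACTIONS = (-1, +1)  # move left or move right


def env_step(state: int, action: int) -> tuple[int, float, bool]:
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else -0.01  # small per-frame cost, bonus at the goal
    return next_state, reward, done


def train(episodes: int = 200, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.1, seed: int = 0) -> list[list[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # A fresh decision on every frame (epsilon-greedy over q).
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            next_state, reward, done = env_step(state, ACTIONS[a])
            # Q-learning update toward reward + discounted best next value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q: list[list[float]]) -> list[int]:
    # Best learned action for each non-terminal cell.
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: q[s][i])]
            for s in range(N_STATES - 1)]


print(greedy_policy(train()))  # [1, 1, 1, 1, 1]
```

No rule says "walk right until the wall". The agent learns that moving right is best in every cell, and it could pick a different action on any frame if the values changed.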

LLM as a Controller?

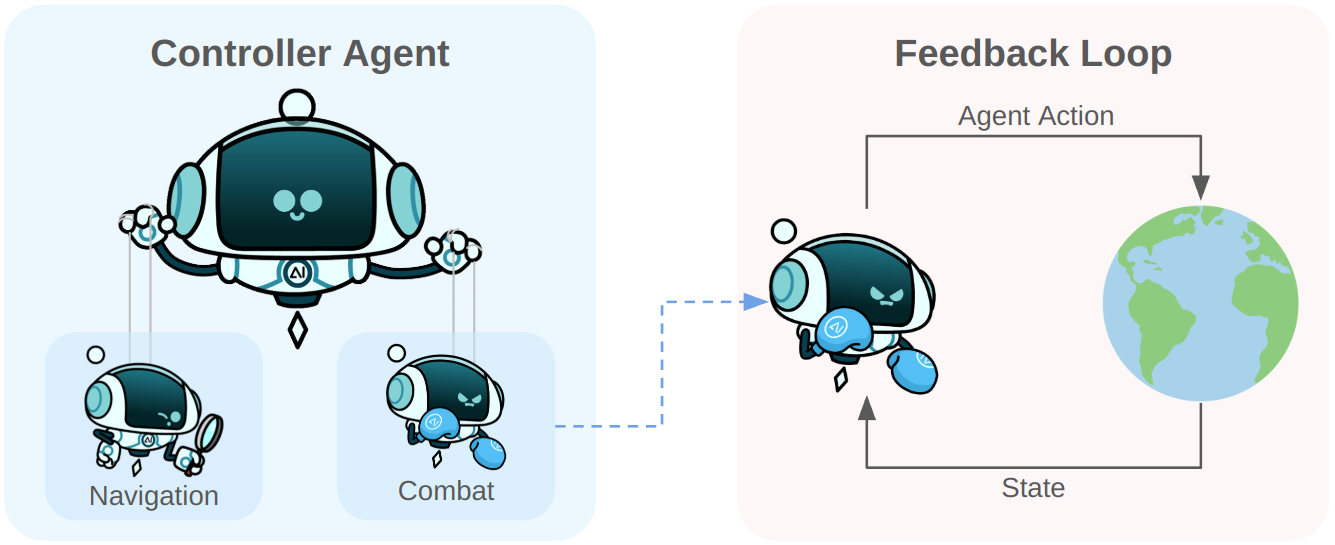

To bridge the gap between selecting hardcoded functions and fully adaptive agents, we can use an LLM-based agent as a high-level controller that selects which behavioral agent should act.

This approach improves on the prevailing function-selection method used by LLM-based agents, but it has a key limitation. Because of latency, the controller still makes decisions relatively infrequently, which limits how dynamically it can switch between behavioral agents.
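The controller setup can be sketched as a two-level loop. This is a simplified model that rests on stated assumptions. Controller latency is represented as a fixed `decision_interval` measured in frames. `controller`, `explore_agent`, and `defend_agent` are hypothetical stand-ins for an LLM and two learned behavioral agents. The active behavioral agent acts on every frame, but the system only notices a change in the situation at the controller's next decision.

```python
from typing import Callable


def explore_agent(frame: int) -> str:
    # Hypothetical learned low-level policy; it emits an action every frame.
    return "move"


def defend_agent(frame: int) -> str:
    return "block"


BEHAVIORS: dict[str, Callable[[int], str]] = {
    "explore": explore_agent,
    "defend": defend_agent,
}


def controller(threat: bool) -> str:
    # Hypothetical stand-in for the slow LLM controller.
    return "defend" if threat else "explore"


def run(decision_interval: int, threat_starts: int, n_frames: int = 30) -> list[str]:
    active = controller(threat=False)
    actions = []
    for frame in range(n_frames):
        threat = frame >= threat_starts  # the situation changes mid-episode
        if frame % decision_interval == 0:
            # Latency: the controller only re-selects every few frames.
            active = controller(threat)
        # The selected behavioral agent still acts on every frame.
        actions.append(BEHAVIORS[active](frame))
    return actions


def reaction_delay(decision_interval: int, threat_starts: int = 7) -> int:
    # Frames between the threat appearing and the first defensive action.
    return run(decision_interval, threat_starts).index("block") - threat_starts


for k in (1, 5, 20):
    print(k, reaction_delay(k))
```

With a threat appearing at frame 7, the delay is 0 frames for an interval of 1, 3 frames for an interval of 5, and 13 frames for an interval of 20. The slower the controller, the longer the wrong behavioral agent stays in charge.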

Conclusion

The definition of an “agent” in AI is evolving, but it’s important to distinguish between systems that learn behaviors dynamically and those that rely on pre-defined heuristics. While LLMs are powerful tools that can be used as part of agent-based systems, they are not agents in themselves unless coupled with mechanisms that allow them to interact continuously with an environment.

Behavioral agents, particularly those that operate at a low level and make decisions frame-by-frame, offer a level of flexibility and adaptation that rules-based systems cannot achieve. As AI continues to advance, we may see more hybrid approaches where LLMs serve as high-level controllers, selecting from learned policies rather than static rules.

Ultimately, understanding these distinctions helps in designing better AI systems—whether for gaming, automation, or more complex decision-making tasks in the real world.